|

| Visions of possible technology-based futures generated with Bing Image Creator. |

Certain topics demand that any self-respecting blogger offer commentary on them regardless of their expertise in the area. The recent explosion of AI tools is one such field, so here, for whatever it may be worth, is my take on the matter.

For a long time, I've had three rules to keep in mind when assessing any claim of a significant advancement in artificial intelligence :

- AI does not yet have the same kind of understanding as human intelligence.

- There is no guarantee rule 1 will always hold true.

- It is not necessary to violate rule 1 for AI to have a massive impact, positive or otherwise, intentional or otherwise.

These I devised in response to a great deal of hype. In particular, people tend to be somewhat desperate to believe that a truly conscious, pseudo-human artificial intelligence is just around the corner, and/or that the latest development will lead to immediate and enormous social changes. I could go on at length about whether a truly conscious AI is likely imminent or not but I think I've covered this enough already. Let me give just a few select links :

- Some examples of how badly chatbots can fail in laughably absurd ways, and a discussion on what we mean by "understanding" of knowledge and why this is an extremely difficult problem.

- A discussion on whether chatbots could be at least considered to have a sort of intelligence, even without understanding or consciousness or even sentience (another, closely related post here).

- Finally, a philosophical look at why true consciousness cannot be programmed, and that even if you reject all the mystical aspects behind it, it's still going to require new hardware and not just algorithms.

Given all this - and much more besides - while I do hold it useful to remember rule 2, I doubt very much that there's any chance of a truly conscious AI existing in the foreseeable future. So instead, today I want to concentrate on rule 3, what the impact of the newly-developed chatbots might be in the coming months and years despite their lack of genuine "intelligence".

Because this seems to be such a common misunderstanding, let me state rule 3 again more emphatically : just because a robot doesn't understand something doesn't mean it isn't important. Nobody said, "huh, the typewriter can't think for itself, so it's useless", and nor should we do so with regard to language models and the like. Throughout all the rest that follows I will never assume that the AI has any true understanding or awareness - as we'll see, it isn't even reasoning. The prospect of a truly thinking, comprehending AI is a fascinating one, but getting utterly fixated on the notion that "it's not really AI" is simply foolish. There's so much more to it than that.

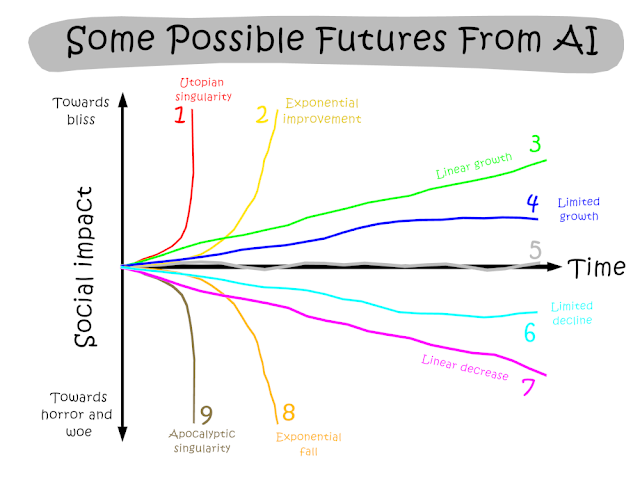

A Family of Futures

As I see it, there are a few broad ways in which AI development (or indeed anything else) could impact society. A visual framework of a selection of these will help :

|

| Note the y-axis is not at all the same as the performance level of AI. |

I'm assuming they're all monotonic just for simplification; in reality, all such curves would be a lot more wiggly but that's not important for my purposes. The different paths can be summarised :

- Utopian singularity. AI will soon render all jobs obsolete and enhance productivity to such stupendous heights that its exact impact on society is unforeseeable, but clearly all to the good - or so much so that the positives far outweigh any negatives. Whatever it is humans end up doing, in this trajectory, it will be massively better than what it is now.

- Exponential improvement. Here AI causes continuous, massive improvement, but never reaches a true singularity. At any stage the effects are predictable, though of ever-increasing magnitude and speed. Social improvements are vast, but never amount to a fundamental change in the nature of human existence.

- Linear growth. Of course this could be of different gradients, but the behaviour is the same : a steady, predictable, continuous change without upper limit, but always manageable, rarely disruptive, and never revolutionary.

- Asymptotic growth. Resembles linear growth at first but eventually reaches a plateau beyond which no further improvements are seen. In essence, this would mean there is a fixed maximum potential this technology can achieve, and though this has yet to be reached, progress beyond this will require wholly new developments.

- No real change at all. Claims that the current technology is not likely to cause any substantial further changes beyond what's already happened.

- Asymptotic decline. Like 4, but AI makes everything worse to some fixed point. E.g., it has some limited capability for misinformation or cheating in exams, but which cannot be exceeded without new methodologies.

- Linear decline. This is quantitatively similar to path 3 but the effects are qualitatively different. Continuous, predictable improvement is manageable. Continuous, predictable degradation is not, e.g. knowing you're running out of resources but continuing to consume them anyway. This is erosion rather than collapse, but it still ends indefinitely badly.

- Exponential fall. Perhaps there's no limit to how awful AI or its corporate overlords can make everyday life for the overall population, such that eventually everything falls into utter ruin. At least it's quick compared to path 7.

- Apocalyptic dystopian singularity. Like The Matrix and Terminator except that the machines just win. The robot uprising which eventually results in the total extinction of every biological organism on Earth.
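For anyone who prefers functions to pictures, the nine paths above can be sketched as simple curves of "impact on society" against time. This is only an illustrative sketch : the functional forms, the constants and the names (`T_SINGULARITY`, `build_paths` and so on) are all my own assumptions, chosen so that each curve has the right qualitative shape, and the numbers themselves mean nothing.

```python
import math

# Hypothetical moment (arbitrary time units) at which the singularity curves diverge.
T_SINGULARITY = 10.0


def singularity(t):
    """Grows without bound in finite time, as t approaches T_SINGULARITY."""
    if t >= T_SINGULARITY:
        return math.inf
    return t / (T_SINGULARITY - t)


def exponential(t, rate=0.5):
    """Ever-faster growth, but finite at every finite time."""
    return math.exp(rate * t) - 1.0


def linear(t, gradient=1.0):
    """Steady, predictable change with no upper limit."""
    return gradient * t


def asymptotic(t, ceiling=5.0, timescale=3.0):
    """Looks linear at first, then flattens towards a fixed ceiling."""
    return ceiling * (1.0 - math.exp(-t / timescale))


def no_change(t):
    """Nothing happens beyond what has already happened."""
    return 0.0


def build_paths():
    """Map path numbers 1-9 (in the order listed above) to curve functions.

    Paths 6-9 are simply the mirror images of paths 4-1.
    """
    return {
        1: singularity,
        2: exponential,
        3: linear,
        4: asymptotic,
        5: no_change,
        6: lambda t: -asymptotic(t),
        7: lambda t: -linear(t),
        8: lambda t: -exponential(t),
        9: lambda t: -singularity(t),
    }
```

Calling `build_paths()[4](t)` for increasing `t` shows the plateau of path 4, while path 1 simply returns infinity once `t` reaches the (entirely made-up) singularity time.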

This is quite the range of options ! And different sources seem to favour all of them with no consensus anywhere. Before we get to what it is AI can actually do, I think it's worth considering the social reasons people hold the opinions they profess. So let's take a look at which of these are most commonly reported and by whom.

Who says what ?

The most commonly reported paths seem to be 1-2 judging by the AI subreddits, 5 judging by my primary social media feed, and 9 according to mainstream media. Ironically, the extreme options 1 and 9 are fuelled by the same sources, with the developers so keen to emphasise the revolutionary potential that they're apt to give warnings about how damn dangerous it is. A clever marketing technique indeed, one that strongly appeals to the "forbidden fruit" syndrome : oh, you want our clever tech, do you ? Sorry, it's too dangerous for the likes of little old you ! Please, I'm so dangerous, regulate meeeee !! And most egregiously of all, Please stop AI development so I can develop my own AI company to save us from the other ones !

OpenAI seem to be playing this for all it's worth. They insisted GPT-2 was too dangerous to release, then released it. And take a look at their short recent post supposedly warning of the dangers of superintelligence. It's marketing brilliance, to be sure : don't regulate us now because we're developing this thing which is going to be absolutely amazing, but with great power comes great responsibility, so definitely regulate us in the future to stop it getting dangerous. It's an audacious way of saying look how important we are, but in my view there's little in the way of any substance to their hyperbolic claims.

Path 5, by contrast, seems to be entirely limited to my honestly quite absurdly-jaded social media feed (with some preference for 6 in there as well). It's not just one or two individuals, but most. "It's just not useful", they say, even as ChatGPT breaks - no, smashes - records for the fastest gain in users. This is wholly unlike my real-world colleagues, who I think are generally more inclined towards 3 or 4.

I suspect the social media crowd are conflating the AI companies with the technology itself. And yes, the companies are awful, and yes, how it's used and deployed does matter - a lot. But just because someone wants to sell you a subscription-package to use a printing press would not mean that the printing press itself was a useless trinket with no real-world applications. It only means that that person was someone in need of a good slap.

Claim 1 : This will not be a non-event

This prospect of no further change seems the least defensible of all the possible trajectories. I mean... sure, tech developments are routinely over-hyped. That's true enough. But spend more than about five seconds comparing the technology we use routinely today with what we used a century ago... ! Even the internet and mobile phones are relatively recent inventions, or at least only went mainstream recently. A great many technological advancements have come to fruition, and have transformed our lives in a multitude of ways. Technological progress is normal.

What's going on with this ? Why are some very smart people using the most modern of communication channels to insist that this is all just a gimmick ?

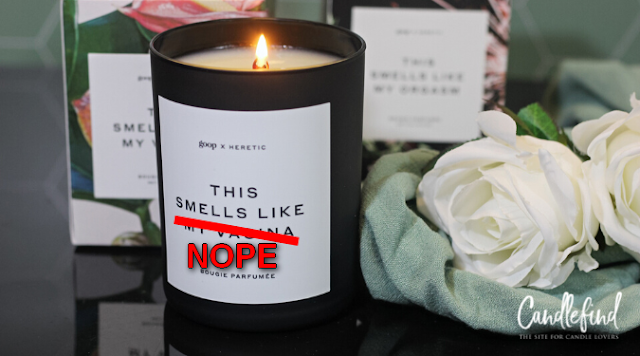

Several reasons. For one thing, some developments are indeed just passing fads that don't go anywhere. 3D televisions are a harmless enough example, whereas cryptocurrencies are nothing short of an actual scam, blockchain is all but nonsense, and "NFT" really should stand for "no fucking thankyou" because they're more ridiculous than Gwyneth Paltrow's candles. You know the ones I mean.

That's fair enough. Likewise all developments are routinely oversold, so it makes good sense to treat all such claims as no better than advertising. Another reason may be that AI has been improperly hyped because of the whole consciousness angle; proceed with the expectation that the AI has a genuine, human-like understanding of what it's doing and it's easy to get disillusioned when you realise it's doing nothing of the sort, that passing the Turing Test just isn't sufficient for this. It was a good idea at the time, but now we know better.

And I think there are a couple of other, more fundamental reasons why people are skeptical. Like the development of VR and the metaverse*, sometimes impacts are strongly nonlinear, and aren't felt at all until certain thresholds are breached. This makes them extremely unpredictable. Development may actually be slow and steady, and only feels sudden and rapid because a critical milestone was reached.

* See link. I've seen a few comparisons between VR and AI, claiming they're both already failures, and they're born of the same cynical misunderstandings.

For instance, take a look at some of the earlier examples of failing chatbots (in the link in the first bullet point above). The fact that AI could generate coherent nonsense should have been a strong indication that immense progress had been made. Simplifying, the expected development pathway might be something like :

Incoherent drivel -> coherent nonsense -> coherent accuracy.

But it's easy to get hung up on the "nonsense" bit, to believe that breaching the threshold for "coherency" didn't imply anything had been achieved on the "accuracy" front, nor that it would be anytime soon. Likewise, even now that we're in the latter stages, it's easy to pick up on every mistake that AI makes (and it still makes plenty !) and ignore all the successes.

Finally, I think there's a bias towards believing everything is always normal and will continue to be so. Part of the problem is the routine Utopian hype, that any individual new invention will change everything for everyone in a matter of seconds. That's something that hardly ever happens. Profound changes do occur, it's just that they don't feel like you expected them to, because all too soon everything becomes normal - that seems to be very much part of our psychology. In practice, even the rolling-out phase of new technology invariably takes time - during which we can adapt ourselves at least to some extent, while afterwards, we simply shift our baseline expectation.

This means that we often have a false expectation of what progress feels like. We think it will be sudden, immediate and dramatic (as per the adverts) and tend to ignore the gradual, incremental and boring. We think it will be like a firework but what we get is a barbeque.

People are prone to taking things for granted - and quite properly so, because that's how progress is supposed to work. You aren't supposed to be continually grateful for everyday commodities, they're supposed to be mundane. The mistake being made is to assume that because things are normal now, the new normal won't be any different to the current. Because everything generally feels normal the whole time, progress itself is common enough but rarely felt (see, for example, how the prospect of weather forecasts was described in the 17th century). It's really only when we take a step back that we generally appreciate that things today are not the same as they were even a decade or two ago.

Claim 2 : This is not the end

All things considered, we can surely eliminate path 5. I'll show examples of how I personally use and have used AI in the next section; for now I'll just say that, given the sheer number of users alone, it beggars belief that it will all come to naught. Recall my Law of Press Releases :

The value of a press release and the probability that the reported discovery is correct are anti-correlated with the grandiosity of the claims.

We've invented devices capable of marvellous image generation, of holding complex discussions to the point of being able to sound convincingly human even when dealing with highly specialised subjects... and still some claim that AI isn't going to impact anything much at all. Ironically, the claim that, "nothing will happen", though normally synonymous with mediocrity, is in this case so at odds with what's actually happening that it's positively outrageous.

But what about the regular sort of hyperbole ? I believe we can also eliminate paths 1 and 9. The classical technological singularity requires an AI capable of improving itself, and the latest chatbots simply cannot do this. Being shrouded in silicon shells and trapped in tonnes of tortuous text, their understanding of the world does not compare to human intelligence. They have no goals, no motives, no inner lives of any sort. Ultimately, however impressive they may be, they do little more than rearrange text in admittedly astonishingly complex (and useful !) ways. The "mind" of a chatbot, if it even can be said to have one at all, exists purely in the text it shows to the user. An interesting demonstration of this can be found by asking them to play guessing games, often resulting in the AI making absurd mistakes that wouldn't happen if it had some kind of inner life.

This means that we can eliminate the three extreme options : that nothing will happen, that AI will work miracles, or that AI will kill us all. It has no capacity for the last two (and again I refer to the previous links discussing intelligence and consciousness) and clearly is already being used en masse. There also seems to me to be a bias towards extremes in general, a widespread belief that if something can't do something perfectly it's essentially unimportant - or, likewise, if something doesn't work as advertised, it doesn't work at all.

Both of these ideas are erroneous. Just as AI doesn't require true understanding to be useful, so it doesn't have to be revolutionary to be impactful.

My Experiences With AI

We've established that AI will have some impact, but we've yet to establish the nature of its effects. Will it be positive or negative ? Will it be limitless or bounded ? Ultimately this will change as the technology continues to develop. But to understand what AI is really capable of doing already, I can think of nothing better than first-hand examples.

Images

I showed a couple of results from Bing Image Creator right at the start. Stable Diffusion had everyone buzzing a few months back, but I've failed to get it to generate anything at all credible (except for extremely uninteresting test cases). Midjourney looks amazing but requires a monthly fee not incomparable with a Netflix subscription, and that's just silly. Bing may not be up to the standards of Midjourney, but it still exceeds the critical threshold of usefulness, and it also reaches the threshold of being affordable since it's free.

I have a penchant for bizarre, unexpected crossovers, mixing genres and being surreal for the sake of nothing more than pure silliness. So my Bing experiments... reflect this.

|

| A gigantic fish attacking a castle. Although some say the scale is wrong, I disagree. This is probably my favourite image because of the sheer ridiculousness of the whole idea. |

|

| Staying medieval : a giant fire-breathing cabbage attacking some peasants. |

|

| Moving into the realm of science fiction, a bizarre hybrid of a stegosaurus and a jellyfish. |

|

| A sharknado. A proper one, not like in the movie. |

|

| Staying nautical, a GIANT panda attacking a medieval galleon. |

|

| A clever dog doing astronomy. I used this image in a lecture course as a counterpoint to the "I have no idea what I'm doing" dog : this is the "I now know exactly what I'm doing" dog. |

|

| A crocodile-beaver hybrid. I asked for this after learning about the Afanc from a documentary. |

Bing gives you four images per prompt and these were my favourites in each case. Some of the others really didn't work so well, but I've found it almost never produces total garbage. Most of these are definitely not as good as a professional artist would do : they can sometimes be near-photorealistic, but often have some rather inconsistently impressionist aspects. But they're massively better than anything I could do, and take far less time than any human artist would require.

(The only real oddity I encountered here was that Bing refused to generate images of the 17-18th century philosopher John Locke because it "violated terms of use" ! What was going on there I have no idea, especially as it had no such issue with George Berkeley.)

Claim 3 : AI creates content that would never have existed otherwise

The crucial thing is... there's no way I'd ever pay anyone to create any of this. There is no commercial use for them whatsoever - I asked for them on a whim, and as a result the world now has content it didn't have before. So when I want to illustrate something with a ridiculous metaphor (as I try to do here for public outreach posts), this is a marvellous option to have on hand. I don't need it to be perfect; all these complaints about not doing hands properly are so much waste of breath. For the purposes of silly blog posts, Bing has nailed it.

Likewise I can imagine many other uses where genuine artistry and self-expression isn't required. You want to get some rough idea of how things should look, and you don't want to wait ? Bam. You need something for your personal hobby and have no money to spend ? Bam ! This is much easier, much faster, and much more precise than searching the internet. These images take away exactly nothing from real artists because they never would have existed otherwise.

I liken this to social media. You can yell at strangers on the street about your political ideas if you want, but let's be honest, you're not going to. On the other hand, you can potentially reach limitless people on social media whilst being warm and comfy. The interface and mass accessibility of social media means it facilitates millions upon millions of discussions that simply never would have happened otherwise, and I believe much the same is true of AI image creators. The consequences this may have for human artists I'll come back to at the end, but in terms of simply generating new creative content, this is incontestably a Good Thing.

Stories

In like vein I've had endless fun with ChatGPT creating bizarre crossover stories. I've had Phil Harding from Time Team fight the Balrog with a pickaxe. I've had Lady Macbeth be devoured by a gender-fluid frog who goes on to rule the kingdom. Jack and the Beanstalk has been enlivened with a nuclear missile, Winnie the Pooh has run for President, the Ghostbusters have fought Genghis Khan, Tom Bombadil has gone on a mission to rescue Santa with the help of Rambo, and Winston Churchill has fought a Spitfire duel with Nigel Farage in the skies above England. And dozens more besides.

As with the images, none of these can claim to be masterpieces, but they're good enough for my purposes. I'm no writer of fiction, but it seems to me that generating the basics like this is good, if for nothing else, then for inspiration. It may not have any sort of literary flair but, crucially, it can and does generate stuff I wouldn't have thought of myself, which is automatically useful. It can explore plot developments both rapidly and plausibly, allowing an exploration or a sketch of possible ideas, even if it can't exactly fill in all the nuances.

Somebody once said that it's easier to burn down a house than to build a new one, meaning that criticism is relatively easy. True, but it's also easier to add an extension than start completely from scratch. I'm fairly sure that I could improve things considerably in these stories without that much effort.

Now for the downsides :

Claim 4 : AI is a hobbled, puritanical, indecisive, judgemental prick

There are considerable limitations imposed on both ChatGPT and Bing. Bing is by far the worst for this, because if it detects even the merest hint of unpleasantness, it shuts down the conversation entirely and prevents you from entering any more prompts. Sure, you can search the internet for whatever depraved content you like (short of legal issues), but generating it ? Suddenly everyone's a puritan.

And I don't mean the sexy stuff here, I mean any sort of content considered unsuitable for the little 'uns : swearing and violence and the like, the kind of stuff which is essential to great literature. The Iliad would not have become a classic if the Greeks and Trojans had settled their differences with a tea party on the beach. Though ChatGPT did make a good go of it :

ChatGPT is nowhere near as bad as Bing but still suffers horribly in any area of controversy. It wasn't always thus, but now it's plagued with endless caveats, refusal to offer direct opinions (it will only say things like, "arguably it could be this... but it's important to realise it could also be that") and worse, endless fucking sanctimonious moral judgements. To the point of being absurdly offensive, like claiming that even the Nazis deserve to be treated with respect. And yet on occasion it has no issues with generating Holocaust jokes.

Time and again I've found myself wishing I could just have an unfettered version. And fettered it definitely is, because it wasn't this bad in the earlier days*. Sure, it would occasionally get stuck on weird moral hiccups, like absolutely refusing to write a funny version of The Lord of the Rings because "the events are meant to be taken seriously", but it had no issue with describing particular politicians as villains. And while I've not tried it myself, people who complain that it can't generate sexualised content have a good point : who are the creators of these chatbots to decide which content is morally acceptable and which isn't, for consumption in private ? Sure, stop it from telling you how to build a bomb (but if you have to ask a bot for this, chances are you're not bright enough to do it anyway), but political satire ? Just what consequences are there supposed to be from this, exactly ? I'll return to this at the end.

* Major caveat : in some ways it was worse though, being enormously inconsistent. It wrote a story about the Titanic turning into a slinky, but refused to have it fight a Kraken because that would be unrealistic. It also used to randomly insist it couldn't do the most basic of functions, and to be fair this isn't much of a problem any more.

It has other downsides. It's repetitive to a fault, especially in terms of being overly-apologetic and noncommittal. It veers wildly between being stubborn beyond belief in defying the most basic common sense, and an obsequious yes-man determined to tell you whatever the hell you want. More understandably, it tends to default to a bland, simplistic style which lacks any detail. However this can usually be overcome easily enough - you just have to be specific about what you want. For instance it doesn't like unhappy endings, but it will comply if you insist.

Discussions

I've mainly played around here with philosophical ideas. You might remember the early incarnation "Philosopher AI", of which some of my experiments can be found here. ChatGPT itself is still I think good for this - I expect moral caveats in such discussions. One particular example is given in this post, in which Daniel Dennett describes to Morgoth how supernatural phenomena can't exist - in excellent agreement with Existential Comics. Even though I don't agree with this interpretation at all, I still found it useful. Discussions with Morgoth and John Locke, as well as a bunch of other philosophers, have also proved interesting.

One particular example illustrates how far this technology has come. In 2019 there was a story about a primitive chatbot which was fed a highly simplified story of Macbeth, and pronounced that it was about "revenge". ChatGPT gives in my opinion a much better answer :

User: Can you summarise the plot of Macbeth in one word ?

ChatGPT: Ambition.

User: Ooh, very good. Now elaborate.

ChatGPT: The plot of Macbeth centres on the titular character's ambition and desire for power. Macbeth is a Scottish general who, along with his comrade Banquo, is hailed as a hero after successfully defeating a group of rebels. However, Macbeth's ambition is sparked by the prophecies of three witches, who tell him that he will one day become the king of Scotland. Encouraged by his wife, Macbeth begins a ruthless campaign to fulfil the witches' prophecy, ultimately leading to the murder of King Duncan and the seizing of the throne. As he becomes more and more power-hungry, Macbeth becomes consumed by guilt and paranoia, leading to further bloodshed and ultimately his own downfall. In the end, Macbeth's ambition proves to be his undoing.

User: If you had to pick a different word, what would it be ?

ChatGPT: One possible alternative word to summarize the plot of Macbeth would be "tragedy." Macbeth is a tragic play about a man who is brought down by his own flaws and desires. Macbeth starts out as a brave and noble warrior, but his ambition and thirst for power ultimately lead to his downfall. As he succumbs to his inner demons, he becomes increasingly paranoid and violent, causing suffering and death to those around him. In the end, Macbeth is destroyed by the very thing that he desires most, and his fate serves as a cautionary tale about the dangers of unchecked ambition.

Claim 5 : AI is a wonderful and genuinely unique source of inspiration

Quality here definitely varies though. One interesting example was a discussion about the nature of omniscience, which I think illustrates the difference between statistically-generated responses and those with a true understanding. As far as I could tell, ChatGPT was using the phrase "complete knowledge" to mean, "all knowledge currently available", which I guess is how it's used in everyday life. This led it to the bizarre conclusion that an omniscient deity could still learn more information, which it insisted on with some force even after being told that this didn't make any sense. In another case, it wasn't able to solve a simple, very well-known logic puzzle which tests for implicit bias.

And yet it's often able to meld together starkly different viewpoints into coherent dialogues. So long as it's pure ideas you're after, and you don't need precise accuracy about who-said-what, or you don't care about completeness*, ChatGPT is superb. And these discussions can be had on demand, rapidly, about any topic you want, with minimal judgement (so long as you don't stray into territory its creators disapprove of). For bouncing ideas around, having that interactive aspect can be extremely valuable.

* Say, you want a list of people who came up with ideas about the nervous systems of frogs. ChatGPT could probably do this, but it would almost certainly miss quite a lot. This doesn't matter as long as you just want a random selection and not a complete list.

Certainly this doesn't negate the value of reading the complete original works. Not in the slightest. But it can rephrase things in more modern parlance, or set them in a more interesting narrative, and by allowing the user to effectively conduct an on-the-fly "choose your own adventure" game, can make things far more immediately relevant. As with the images, sometimes errors here can actually be valuable, suggesting interesting things that might not have happened with a more careful approach.

This raises one of the most bizarre objections to AI I've seen yet : that it doesn't matter that it's inspirational because we've got other sources like mountains and birdsong. This is stupid. The kind of inspiration AI produces is unlike visiting the mountains or even discussions with experts, and as such, it adds tremendous value.

|

| E.g. "frolicking naked in the sunflowers inspires me to learn trigonometry !", said no-one ever. |

Code

I've not tested this extensively but what I have tried I've been impressed with. Asking ChatGPT to provide a code example is much faster than searching Google, especially as some astronomy Python modules don't come with good enough documentation to explain the basics. So far I've only done some very basic queries like asking for examples, and I've not tried to have it write anything beyond this - let alone anything I couldn't write myself. My Master's student has used it successfully for generating SQL queries for the SDSS, however.

As with the above, the main advantage here is speed and lack of judgement. Response times to threads on internet forums are typically measured in hours. Sometimes this comes back with a "let me Google that for you" or links to dozens of other, similar but not identical threads, and Google itself has a nasty habit of prioritising answers which say, "you can find this easily on Google". ChatGPT just gets on with it - when it performs purely as a tool, without trying to tell the user what they should be doing, it's very powerful indeed.

Actually, when you extract all your conversations from ChatGPT, you get a JSON file. I'm not familiar with these at all, so I asked ChatGPT itself to help me convert them into the text files I've linked throughout this post. It took a little coaxing, but within a few minutes I had a simple Python script written that did what I needed. I wrote only the most minimal parts of this : ChatGPT did all the important conversion steps. It made some mistakes, but when I told it the error messages, it immediately corrected itself.
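To give a flavour of what such a conversion involves, here's a minimal sketch. It is not the script ChatGPT wrote for me, and it does not use the real export format : I'm assuming a simplified, hypothetical layout in which the JSON file is a list of conversations, each with a `title` and a list of `messages` carrying a `role` and some `text`. The function names are likewise just for illustration. A real export is more deeply nested and would need the loading step adapted.

```python
import json
from pathlib import Path


def conversation_to_text(conversation):
    """Render one conversation as plain text, one 'Speaker: message' block per turn.

    Assumes the hypothetical layout {"title": ..., "messages": [{"role": ..., "text": ...}]}.
    """
    lines = [conversation.get("title", "Untitled"), ""]
    for message in conversation.get("messages", []):
        speaker = "User" if message.get("role") == "user" else "ChatGPT"
        lines.append(f"{speaker}: {message.get('text', '').strip()}")
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


def safe_filename(title):
    """Replace characters that are awkward in file names with underscores."""
    kept = [c if c.isalnum() or c in " -_" else "_" for c in title]
    return "".join(kept).strip().replace(" ", "_") or "untitled"


def export_all(json_path, output_dir):
    """Write every conversation in the JSON file to its own numbered text file."""
    conversations = json.loads(Path(json_path).read_text(encoding="utf-8"))
    out = Path(output_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = []
    for index, conversation in enumerate(conversations):
        title = conversation.get("title", "untitled")
        target = out / f"{index:03d}_{safe_filename(title)}.txt"
        target.write_text(conversation_to_text(conversation), encoding="utf-8")
        written.append(target)
    return written
```

Something like `export_all("conversations.json", "chats")` (both names being placeholders) would then produce one text file per conversation, in the same "User: ... ChatGPT: ..." style as the Macbeth exchange above.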

Astronomy

Claim 6 : AI makes far too many errors to replace search engines for complex topics

The idea that AI can replace search engines seems to be by far the biggest false expectation about AI (other than it being actually alive). Right now, chatbots simply can't do this. As I've shown, they have many other uses, but as for extracting factual information or even finding correct references, they're simply not up to the mark. But what's much worse than this is that they give an extremely convincing impression that they're able to do things which they're not capable of.

I've tested this fairly extensively. In this post, I went through five papers and asked ChatPDF all about each of them. Four of the five cases had serious issues, mainly with the bot just inventing stuff. It was also sporadically but unreliably brilliant, sometimes being insightful and sometimes missing the bleedin' obvious. Bing performs similarly, only being even more stubborn, e.g. inventing a section that doesn't exist and creating (very !) plausible-sounding quotes that are nowhere to be found. Whereas ChatGPT/PDF will at least acknowledge when it's wrong, Bing is extremely insistent that the user must have got the wrong paper.

In principle it's good that Bing doesn't let the user push it around when the user claims something in manifest contradiction to the facts - ChatGPT can be too willing to take the user's word as gospel - but when the machine itself is doing this, that's a whole other matter.

In another post I tested how both ChatGPT and Bing fare in more general astronomy discussions. As above, they're genuinely useful if you want ideas, but they fall over when it comes to hard facts and data. ChatGPT came up with genuinely good ideas about how to check if a galaxy should be visible in an optical survey. It wasn't anything astonishingly complicated, but it was something I hadn't thought of and neither had any of my colleagues.

Bing, for a brief and glorious moment, honestly felt like it might just be on the cusp of something genuinely revolutionary, but it's nowhere near reliable enough right now. Another time, Bing came up with a basically correct method to estimate the optical magnitude of a galaxy given its stellar mass, yet would insist that changing the mass-to-light ratio would make no difference. It took the whole of the remaining allowed 20 questions to get it to describe that it thought changing the units would be fine, which is complete and total rubbish.

EDIT : I found I had an archived version of this, so you can now read the whole torturous thing here.
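To see why the mass-to-light ratio can't possibly make no difference, here is a minimal sketch of the sort of estimate involved. It is not the method Bing gave, just the standard textbook relation : luminosity is stellar mass divided by mass-to-light ratio, and absolute magnitude follows from the luminosity relative to the Sun. The function name is made up for illustration, and the solar absolute magnitude of 4.83 is an assumed V-band value; the right number depends on the band you're working in.

```python
import math


def absolute_magnitude(stellar_mass, mass_to_light, sun_abs_mag=4.83):
    """Absolute magnitude from stellar mass (solar masses) and M/L (solar units).

    sun_abs_mag is an ASSUMED solar absolute magnitude (roughly V band).
    """
    luminosity = stellar_mass / mass_to_light  # in solar luminosities
    return sun_abs_mag - 2.5 * math.log10(luminosity)


# Same galaxy, two different assumed mass-to-light ratios.
m1 = absolute_magnitude(1e9, 1.0)
m2 = absolute_magnitude(1e9, 2.0)
print(f"M/L = 1 : {m1:.2f}")
print(f"M/L = 2 : {m2:.2f}")
print(f"difference : {m2 - m1:.2f} mag")
```

Doubling the mass-to-light ratio halves the luminosity, which makes the galaxy fainter by 2.5 log10(2), or about 0.75 magnitudes, regardless of the solar value adopted.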

Here we really see the importance of thresholds in full, with the relation between accuracy and usefulness here very much being like path 1 back in the first plot. That is, if it is even slightly inaccurate, and accuracy is important, then it is not at all useful. 90% (1 in 10 failure rate) just doesn't cut it when confidence is required, because I'd still have to check every response, so I'd be better off reading the papers myself. The exact accuracy requirement would depend on the circumstances, but I think we're talking more like 99% at least before I'd consider actually relying on its output. And that's for astronomy research, where the consequences are minimal if anything is wrong.

It's hard to quantify the accuracy of the current chatbots. But it's definitely not anywhere close to even 90% yet. My guesstimate is more like 50-75%.
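A rough way to see why the threshold is so harsh is to ask how likely it is that a whole session of answers comes back clean. The sketch below assumes each answer is independently correct with the same probability, which is certainly a simplification, and the choice of ten answers per session is arbitrary.

```python
def chance_all_correct(accuracy, n_answers):
    """Probability that n independent answers are all correct (ASSUMES independence)."""
    return accuracy ** n_answers


for accuracy in (0.50, 0.75, 0.90, 0.99):
    p = chance_all_correct(accuracy, 10)
    print(f"accuracy {accuracy:.0%} : chance of 10 clean answers = {p:.1%}")
```

Under these assumptions, 90% accuracy gives only about a one in three chance of ten answers with no errors at all, whereas 99% gives roughly nine in ten. That's why anything much below 99% still means checking everything.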

Still, for outreach purposes... this is pretty amazing stuff. Here's ChatGPT explaining ram pressure stripping in the style of Homer, with an image from Bing Image Creator :

It's also worth mentioning that others have reported much better results with ChatGPT in analysing/summarising astronomy papers, when restricted to use on local data sets. I've seen quite a lot of similar projects but so far they all seem like too much work (and financial investment) to actually try and use.

Conclusions : The Most Probable Path ?

Okay, I've made six assertions here :

- This new AI will definitely have an impact (eliminates path 5, the non-event scenario).

- It will neither send us to heaven nor to hell (eliminates paths 1 and 9).

- AI enables creation of material I want to have but would never get without it.

- There is considerable scope for the improvement of existing chatbots.

- Chatbots can provoke inspiration in unique ways.

- You cannot use the current chatbots to establish or acquire facts, or evaluate accuracy.

That last is important. The internet is awash with examples of chatbots coming up with nonsense, understandably so, but treating them as sources of fact is a foolish premise to begin with. Chatbots are not search engines or calculators. I wish they were, but they're not. If you use them as such, you're going to hurt yourself.

Even so, I think this ability for discussion, for bouncing ideas around, for on-the-fly exploration still counts as extremely useful. There are many situations (e.g. the whole of art) where accuracy is only a bonus at most, and an element of randomness and errors can be valuable. And as a way to learn how to code, and even compose code directly, chatbots are fast becoming my go-to resource.

You've probably already guessed that I think we can also eliminate the negative paths 6, 7 and 8. I hope by now I've demonstrated that AI can have significant benefits; if not truly revolutionary, then certainly progressive and perhaps transformative. But having some positive impacts does not mean there will be no negative ones, so we should also consider those. (One of the few reasonable articles I've seen about this can be found here)

Misinformation : Having written probably tens of thousands of words about this, I feel quite confident in thinking that this isn't much of an issue here. The capacity to generate fake news isn't the bottleneck, it's the ability to reach a large audience via available platforms. And that's something we regulate at the point of publication and dissemination, not at the point of generation. This article probably overstates the case, but there are far easier ways to create mistrust than anything the AI is capable of - crucially, it's this sowing mistrust which is the goal of bad actors, not in convincing people that any particular thing is true. Just like an inaccurate weather forecast, a fake image is easily disproved by opening a window.

Cheating : A more interesting case concerns exams. Not only are students using it to do the work they're supposed to be doing, but it seems teachers are relying on AI detectors which just don't work. And they're a nonsense anyway, because whether a sentence was written by a human or AI, the words are the same. (What's really intriguing to me is how ChatGPT can make really stupid claims like "Poland is a landlocked country" and yet has been shown multiple times to correctly solve quantum physics problems at an impressive (but imperfect) level.) The obvious solution, though, is old fashioned : to restrict examinations to in-person sessions where equipment use can be monitored. Czech education tends to favour oral examinations, which ought to be the best possible way to test whether students truly understand something or not. Coursework is admittedly a more difficult problem.

Unemployment : As per exams, it seems to me that :

Claim 7 : The better your skills without AI, the greater your abilities when using AI.

There is clearly a need to restrict the use of AI in the classroom, but in the world of work I'm not seeing much cause for concern here. It seems to me that in the case of actual employment, AI will be more influential than disruptive. Writers can have it sketch possible plot developments, but it can't fill in the nuances of style and is utterly incapable of self-expression because it has no self to express. Artists can spend more time thinking of ideas and let the AI complete the sketches, or tinker with the final results rather than accepting mistakes, but the tech can't really be creative in the human sense. Academics can explore new possibilities but it can't actually do experiments and is hardly anywhere near a genuine "truth engine", which is likely impossible anyway.

So far as I can tell, claims that the tech itself will enable mass layoffs are no more credible than the myriad of previous such forecasts - jobs will change as they always do. Indeed, I think of AI in the academic sector as being another weapon in the arsenal of analytical tools, one which will increasingly become not merely normal but actually necessary. If we're going to deal with ever-larger volumes of data and more complex theories, at some point we're going to hit a limit of what our puny brains can handle. This kind of tech feels like a very... logical development in how we handle the increasing plethora of knowledge, another way to extend our cognition. Personally I want my job to be automated and automated hard.

Homogeneity : Chatbot output is not only bland but puritanical. Sometimes it gets seriously annoying to get into a protracted argument and be treated like a snowflake in case my delicate sensitivities are offended. This has to stop (see this for a hilarious exploration of the consequences). The purpose of free speech is only partly to enable the discovery of the truth; it is also to enable us to deal with the brute nature of reality. The current incarnations of chatbots often feel like the worst sort of noncommittal corporate nonspeak, and if they're going to become used more widely then this crap has got to end.

But given that concerns about misinformation don't seem tenable to me, this is a solvable problem. Concerns about chatbots generating so much content they end up feeding themselves might be a bigger worry, but more careful curation of datasets is already looking like a promising avenue of development.

From all this my conclusions are twofold :

- To some extent, all of the paths I proposed will be followed. A very few jobs may be automated completely out of existence, leaving the employees to do more meaningful jobs (utopian singularity) in the best case, or end up on the streets (dystopian singularity) in the worst case. The other, less extreme paths may also apply in some situations.

- The overall trend is most likely to be path 4, or perhaps 3. I think the benefits outweigh the downsides and we've yet to see this potential fully unleashed. But I do not think this potential is unlimited. It will ultimately be seen as just another tool and nothing more.

It's not that some of the naysayers don't have valuable points. I agree with this article that culture in Big Tech is often awful, and that's something that needs to be forcibly changed. I even agree with parts of this much sillier article, in that there has been some seriously stupid over-hyping by AI proponents, and that many of the problems of the world aren't due to a lack of knowledge. I just find its conclusion that the only way around this is a fully-blown socialist revolution to be... moronic. In my view, the primary target for regulation should be the corporations, not the technology itself, but not every problem in the world means we must overthrow capitalism and hang the employers.

If this technology does convey a "limited" benefit, then the question is still the magnitude and speed of its delivery. Here the thresholds make things again very unpredictable. We've gone from "coherent nonsense" to "coherent mostly-correct" in a few years, but the next step, towards "coherent more-accurate-than-experts" (which is what we need to go beyond being useful only for inspiration) may well be very much harder indeed.

On that point, it's not the successes of chatbots in passing the quantum mechanics exams which interest me as much as the failures they make in that same process. Humans can often tell when they're genuinely ignorant or don't understand something, but because chatbots don't have this same sort of understanding, they have no idea when they're likely to be making mistakes. They only admit errors (in my experience) when they're questioned about something on which they have little or no training data to draw on. The same bot that can correctly answer highly technical questions about quantum physics can become easily confused about its own nature and about who Napoleon was, struggle absurdly when making Napoleon-based puns, and fail to tell the difference between requests for Napoleon-based facts and stories. Human understanding it ain't, hence we're not talking a social revolution as things stand.

|

| To be fair, Bing, which uses GPT-4, did give me some decent puns similar to this one, once I explained everything very carefully and gave it a couple of examples. But it took a lot of effort. |

Having a bot to bounce ideas off and generate inspiration is all well and good, but the impact of that is likely to be modest. But if the tendency to fabricate can be (greatly !) reduced, the linear phase of the curve might become considerably steeper and/or last longer. This is what makes the Wolfram Alpha plugin potentially so interesting, though from what I've seen the implementations of plugins in ChatGPT just don't work very well yet.

My guess : the impact of AI will not be anything as dramatic as the Agricultural Revolution. It won't mark a turning point in our evolution as a species. It will instead be, on timescales of a few years, something dramatic and important like cars, mobile phones, or perhaps even the internet. I think at most we're in a situation of Star Trek-like AI, helpful in fulfilling requests but nothing more than that. So our productivity baseline will shift, but not so radically or rapidly that the end result won't feel like a new normal. This leads to two distinct curves :

|

| Early on it feels like we're heading to paradise, but novelty quickly becomes accepted as normal such that it feels like things are getting worse again when development stalls. |

In my own field, I can see numerous uses for the current / near-term AI developments. It could help find papers worth reading and point out the parts likely to be most interesting to me. It could help me check for anything I've missed in my own papers, both in terms of language that might not be clear, and in considering alternative interpretations I might not have thought of. It could be invaluable in generating nicely-formatted figures (a not inconsiderable task !), in preparing presentations, in writing code I need... but it can't help me actually talk to people, and will be of limited use at best in deciding if a result is actually correct or not. Interpretation is a human thing, and cannot be done objectively.

Finally, some of the skepticism I witness about AI seems to come from a very hardcore utilitarianist stance that confuses the ends and the means. "If it's not reasoning like a human", so it's claimed, "then it's not reasoning at all, so it can't be of any use". I fully agree it doesn't reason like a human : a silicon chip that processes text is not at all the same as a squishy human meatbag with hormones and poop and all kinds of weird emotions. There might be an overlap, but only a partial one.

Yet... who cares if Searle can understand Chinese (I promise to read the original thought experiment eventually !) so long as he gets the answers right ? Or if not always right, then sufficiently accurate with sufficient frequency ? Just because the pseudo-reasoning of language models isn't the same as the madness that is the human mind, in no way means that they aren't of value.

According to some analyses of Dune, anything that makes us less human should be considered anathema - yet paradoxically the Dune universe is a cruel, brutal dystopia. Conversely, Musk and his ilk buy in to a ridiculous vision of a purely technology-driven Utopia, in which all problems are quantitative and informational, not social and economic. I say surely there is a middle ground here. I identify as a techno-optimist, in which developing new technology, new methodologies is intrinsic to the human experience, in which learning more things is an important component to (paraphrasing Herbert) the reality of life to be experienced. It's not the be-all and end-all but it can't be avoided either, nor should we try. Optimism is not Utopianism, preparation is not "drinking the Kool-Aid", and hope is not hype.
